In today’s fast-paced business environment, connecting and synchronizing cloud, SaaS, and on-premise systems is key to driving real-time insights and operational efficiency. Crosser’s low-code platform supports this integration by processing data in real time across multiple environments. With hybrid capabilities that span cloud and on-premise systems, Crosser allows businesses to process data in the cloud while taking the necessary actions locally.

In this article, we explore key use cases that demonstrate how Crosser supports cloud-to-cloud and cloud-to-on-premise integration.

Key Use Cases

Connect Your SaaS Services

Many businesses rely on a combination of SaaS platforms for their daily operations, and keeping those platforms in sync is a critical challenge. Whether it’s making sure data flows seamlessly between systems or transforming data for compatibility, the integration process can be complex.

With Crosser, businesses can simplify this process. The platform allows you to easily connect platforms like IBM Maximo and Microsoft Dynamics, enabling data to move automatically between them. For example, a Flow can be set up to transfer data from one platform to another on a defined schedule, like hourly updates. You don’t need to manually handle data mapping or synchronization. Instead, Crosser’s low-code design tool lets you automate the entire process, ensuring data consistency without the need for constant oversight.

Crosser Example Flow | Connect your SaaS Services

Monitor Your SaaS Services

Ensuring your cloud-based services are always up and running is crucial for maintaining operational efficiency. A failure in a critical service can lead to downtime and lost productivity, so it’s important to be able to monitor these services continuously.

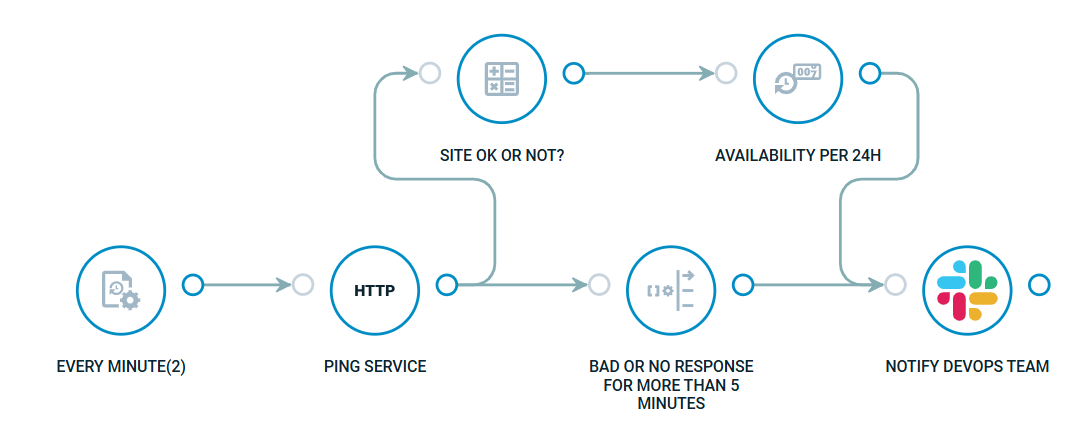

Crosser helps businesses by enabling them to monitor their cloud services via HTTP endpoints. For instance, you can set up a Flow to ping a service every minute to check its status. If there’s an issue, such as a timeout or a failure to respond, Crosser can notify your DevOps team via Slack or another notification service. Not only can you receive real-time alerts, but you can also set up regular reports on service uptime to monitor availability trends. This functionality can be applied to services, databases, or any system that needs to be closely monitored.
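To make the check-and-notify logic concrete, here is a minimal Python sketch of what such a monitoring step does. It is not Crosser's implementation or API: the function names `http_status` and `check_service` and the example URL are assumptions made for illustration. The returned dictionary uses a single `text` field, the shape a Slack incoming webhook accepts, but sending it is left out.

```python
import urllib.request
import urllib.error
from typing import Callable, Optional


def http_status(url: str, timeout_s: float = 5.0) -> int:
    """Return the HTTP status code for a GET request to url."""
    try:
        with urllib.request.urlopen(url, timeout=timeout_s) as response:
            return response.status
    except urllib.error.HTTPError as err:
        # 4xx/5xx responses are raised as HTTPError; the code is still useful.
        return err.code


def check_service(name: str, url: str,
                  fetch: Callable[[str], int] = http_status) -> Optional[dict]:
    """Return an alert payload if the service looks unhealthy, else None."""
    try:
        status = fetch(url)
    except OSError as err:
        # Connection failures and timeouts are OSError subclasses.
        return {"text": f"{name} is unreachable: {err}"}
    if status >= 400:
        return {"text": f"{name} returned HTTP {status}"}
    return None


if __name__ == "__main__":
    # Hypothetical endpoint; replace with a real health-check URL.
    alert = check_service("crm", "https://example.invalid/health")
    print(alert or "service is healthy")
```

The `fetch` parameter exists so the status lookup can be swapped out, for example with a stub when testing the alerting rules without network access.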

Crosser Example Flow | Monitor your Services

Operate on Your Cloud Databases with SQL

Cloud data is essential for analytics and decision-making, but keeping that data properly organized and maintained requires regular operations. Whether it’s cleaning up tables or moving data between systems, those tasks need to be automated for efficiency.

Crosser enables users to transfer and manipulate data across cloud systems while also allowing them to execute SQL queries as part of their Flows. For example, you can automate the transfer of records from a MongoDB database to a Snowflake data warehouse. And beyond simple data movement, Crosser gives you the flexibility to perform SQL-based tasks such as running queries for maintenance or applying complex data transformations. The low-code environment allows you to focus on the logic while reducing the amount of manual coding needed.
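The transfer-then-maintain pattern described above can be sketched in a few lines of Python. This is an illustration under stated assumptions, not how Crosser modules work internally: Python's built-in `sqlite3` stands in for the data warehouse, a list of dictionaries stands in for documents read from a document database, and the table name `readings` and its columns are hypothetical.

```python
import sqlite3


def load_and_clean(conn: sqlite3.Connection, docs: list) -> int:
    """Copy documents into a SQL table, then delete rows without a reading.

    Returns the number of rows removed by the maintenance query.
    """
    conn.execute(
        "CREATE TABLE IF NOT EXISTS readings ("
        "id TEXT PRIMARY KEY, asset TEXT, reading REAL)"
    )
    # Flatten each document into a row; a missing reading becomes NULL.
    conn.executemany(
        "INSERT OR REPLACE INTO readings (id, asset, reading) VALUES (?, ?, ?)",
        [(d["_id"], d["asset"], d.get("reading")) for d in docs],
    )
    # Maintenance step expressed as plain SQL.
    removed = conn.execute("DELETE FROM readings WHERE reading IS NULL").rowcount
    conn.commit()
    return removed


def average_by_asset(conn: sqlite3.Connection) -> list:
    """Example transformation: average reading per asset."""
    return conn.execute(
        "SELECT asset, AVG(reading) FROM readings GROUP BY asset ORDER BY asset"
    ).fetchall()


if __name__ == "__main__":
    # Hypothetical documents, shaped like records from a document database.
    documents = [
        {"_id": "a1", "asset": "pump-1", "reading": 41.5},
        {"_id": "a2", "asset": "pump-2", "reading": None},
        {"_id": "a3", "asset": "pump-1", "reading": 43.0},
    ]
    connection = sqlite3.connect(":memory:")
    print("rows removed:", load_and_clean(connection, documents))
    print(average_by_asset(connection))
```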

Operate on Your Cloud Data with Custom Code

Sometimes, businesses need more than what standard data transformations and SQL queries can offer. For complex processing, custom code may be required to execute specific algorithms or business logic.

Crosser lets you integrate custom code directly into your Flows, whether it's in C#, Python, or JavaScript. For example, you could set up a Flow where an HTTP request triggers the execution of your custom code. The Flow can pull additional data from a database, apply your custom algorithm, and return results to the client via the HTTP response. This approach gives you the flexibility of traditional coding within a low-code platform, making it easier to manage complex tasks while maintaining efficiency.
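As a rough idea of the kind of logic that could sit in such a custom code step, the Python sketch below takes a JSON request body, looks up reference data, applies a small calculation, and returns a status code with a JSON response body. Everything here is hypothetical: the function name `handle_request`, the `LIMITS` table (standing in for a database lookup), and the request fields are illustrative and not part of Crosser's API.

```python
import json
from statistics import mean

# Hypothetical reference data; in a real Flow this would come from a database.
LIMITS = {"pump-1": 50.0, "pump-2": 65.0}


def handle_request(body: str, limits: dict = LIMITS) -> tuple:
    """Turn a JSON request body into an (HTTP status, JSON response body) pair."""
    try:
        request = json.loads(body)
        asset = request["asset"]
        readings = [float(value) for value in request["readings"]]
    except (ValueError, KeyError, TypeError):
        # Covers invalid JSON, missing fields, and non-numeric readings.
        return 400, json.dumps({"error": "expected 'asset' and numeric 'readings'"})
    if not readings:
        return 400, json.dumps({"error": "'readings' must not be empty"})
    if asset not in limits:
        return 404, json.dumps({"error": f"unknown asset {asset!r}"})

    # The "custom algorithm": compare the average reading with the asset's limit.
    average = mean(readings)
    result = {"asset": asset, "average": average, "over_limit": average > limits[asset]}
    return 200, json.dumps(result)


if __name__ == "__main__":
    print(handle_request('{"asset": "pump-1", "readings": [40, 50, 60]}'))
```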

Condition-Based Monitoring for Centralized Data

Large-scale sensor data is crucial for industries that rely on real-time insights from multiple locations, such as manufacturing or energy. Monitoring this data can be challenging, especially when you need to detect anomalies or patterns across a wide network.

Crosser allows businesses to monitor sensor data stored in centralized systems like AVEVA Insight. With its condition-based trigger module, Crosser makes it easy to define complex conditions that analyze data from multiple locations. For example, you can detect deviations between similar setups across locations and trigger alerts when something unusual occurs. Additionally, you can integrate machine learning models into your Flows to perform advanced analysis directly within Crosser, improving accuracy and decision-making.
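One simple way to express "detect deviations between similar setups across locations" is to compare each site's value with the median of all sites. The Python sketch below shows that rule only; it is an assumed example, not the logic of Crosser's condition-based trigger module, and the site names, values, and tolerance are made up.

```python
from statistics import median


def find_deviating_sites(values: dict, tolerance: float) -> list:
    """Return the sites whose value differs from the cross-site median
    by more than the given tolerance, sorted by name."""
    if not values:
        return []
    baseline = median(values.values())
    return sorted(
        site for site, value in values.items()
        if abs(value - baseline) > tolerance
    )


if __name__ == "__main__":
    # Hypothetical bearing temperatures (degrees C) for the same machine type.
    temperatures = {"plant-a": 71.0, "plant-b": 70.5, "plant-c": 84.0}
    print(find_deviating_sites(temperatures, tolerance=5.0))
```

Using the median rather than the mean as the baseline keeps a single outlying site from shifting the reference value it is compared against.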

Reverse Triggers from Cloud to On-Premise Systems

In industries like manufacturing, it’s often necessary to trigger actions in on-premise systems based on data processed in the cloud. Whether it’s reacting to sensor data or responding to analytics, those actions need to happen in real time.

Crosser makes this process simple by enabling cloud-based triggers to initiate actions on-premise. For example, after processing data in the cloud, a Flow can publish an alert to the internal MQTT broker of the cloud Node. Another Flow, running on a Crosser Node in the on-premise environment, subscribes to that broker and acts on the alert, for instance by creating a work order in an ERP system or triggering a local process. Crosser also supports alternative communication methods, such as WebSocket, ensuring that cloud data flows securely to on-premise systems. This use case is an example of leveraging the hybrid architecture supported by the Crosser platform.
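The on-premise side of this pattern boils down to a message handler: receive an alert from a subscribed topic, decide whether it matters, and turn it into a local action. The Python sketch below shows only that handler and leaves out the MQTT client itself. The topic name, the alert fields (`asset`, `severity`, `message`), and the work order shape are all assumptions for illustration, not Crosser's message format.

```python
import json
from typing import Optional

ALERT_TOPIC = "alerts/maintenance"  # hypothetical topic name


def on_alert(topic: str, payload: bytes) -> Optional[dict]:
    """Turn a cloud alert message into a work order request.

    Returns None when the message should be ignored.
    """
    if topic != ALERT_TOPIC:
        return None
    try:
        alert = json.loads(payload.decode("utf-8"))
    except ValueError:
        # Covers both invalid UTF-8 and invalid JSON.
        return None
    if not isinstance(alert, dict) or alert.get("severity") != "high":
        return None
    return {
        "type": "work_order",
        "asset": alert.get("asset"),
        "reason": alert.get("message", ""),
    }


if __name__ == "__main__":
    message = b'{"asset": "pump-1", "severity": "high", "message": "vibration"}'
    print(on_alert(ALERT_TOPIC, message))
```

In a real deployment a function like this would be called from the subscribing client's message callback, and the returned dictionary would be passed on to the ERP integration.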

High Availability - Ensuring Reliability in the Cloud

Crosser Nodes are designed to ensure high availability and reliable performance in cloud environments. Whether deployed on platforms like Azure Container Services or AWS Elastic Kubernetes Service, these Nodes provide a robust, fault-tolerant solution that supports continuous operation. To ensure your Flows run uninterrupted, Crosser uses several strategies:

Flow Configurations: Flows are stored locally on Nodes, allowing them to continue running autonomously without the need for a constant connection to the Control Center. The Control Center acts as the centralized management hub, where users design, configure, and monitor flows, ensuring streamlined deployment and oversight.

Persistent Queues: For applications requiring guaranteed message delivery, Crosser supports persistent message queues that ensure data can be processed even in case of Node restarts.

Managed Nodes: For businesses looking for a fully managed solution, Crosser offers managed Nodes that handle high availability and scalability without the need for additional infrastructure setup.
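To illustrate the general idea behind a persistent message queue, the Python sketch below stores messages on disk and removes them only after they are acknowledged, so unprocessed messages are still there after a restart. It is a simplified stand-in built on Python's `sqlite3` module, not a description of how Crosser implements its queues; the class name `PersistentQueue` and the file name are hypothetical.

```python
import sqlite3
from typing import Optional


class PersistentQueue:
    """A minimal disk-backed FIFO queue with explicit acknowledgement."""

    def __init__(self, path: str) -> None:
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS queue ("
            "id INTEGER PRIMARY KEY AUTOINCREMENT, body TEXT NOT NULL)"
        )
        self.conn.commit()

    def put(self, body: str) -> None:
        """Append a message; it is committed to disk before returning."""
        self.conn.execute("INSERT INTO queue (body) VALUES (?)", (body,))
        self.conn.commit()

    def peek(self) -> Optional[tuple]:
        """Return the oldest (id, body) pair without removing it, or None."""
        return self.conn.execute(
            "SELECT id, body FROM queue ORDER BY id LIMIT 1"
        ).fetchone()

    def ack(self, message_id: int) -> None:
        """Remove a message once it has been processed successfully."""
        self.conn.execute("DELETE FROM queue WHERE id = ?", (message_id,))
        self.conn.commit()

    def close(self) -> None:
        self.conn.close()


if __name__ == "__main__":
    queue = PersistentQueue("queue-demo.db")  # hypothetical file name
    queue.put("reading-1")
    message = queue.peek()
    if message is not None:
        print("processing", message[1])
        queue.ack(message[0])
    queue.close()
```

Separating `peek` from `ack` is the design choice that matters here: if the process stops between the two calls, the message stays in the queue and is picked up again after the restart.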

A Unified Solution for All Layers

Crosser’s low-code platform provides a comprehensive solution for integrating and processing data across cloud and on-premise environments. Its hybrid architecture ensures seamless integration between systems, reducing the complexity of managing multiple tools and improving operational efficiency.

For a deeper dive into these use cases and to explore how Crosser can meet your integration needs, watch the webinar recording here or contact our team of experts for personalized insights and solutions.
